From Black Box to Verifiable AI: Story Protocol and EigenCloud’s Shared Vision

Co-authored by Story Protocol and EigenCloud

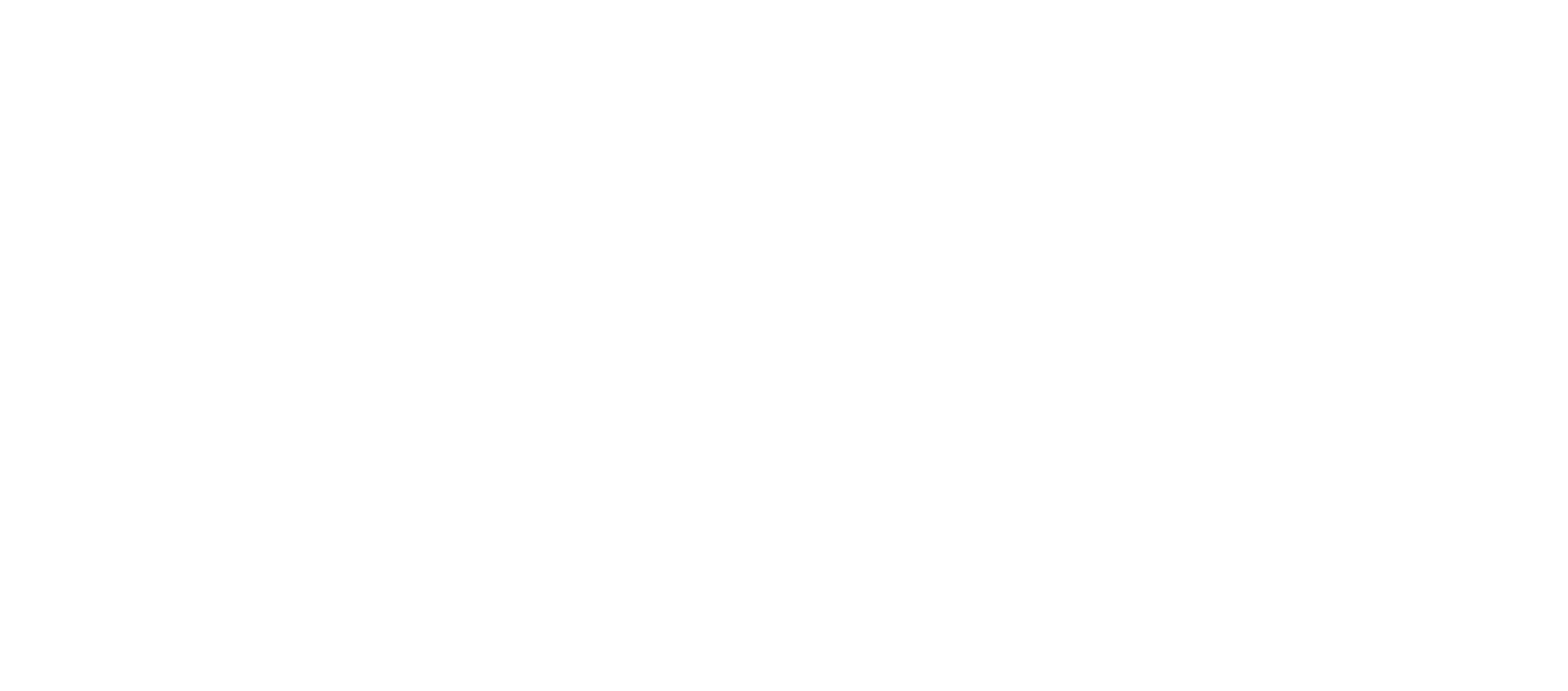

Today, AI is a black box. The core problem is verifiability: there are no receipts for what data went in, what code ran, or who holds the rights. An artist sees her style reproduced with no shared record of whether her work was in the training set or under what license. An engineer watches performance degrade with no proof of whether the underlying model was swapped, quantized, or filtered. At scale, that gap forces everyone to trust incumbents by default, concentrating power and value in the platforms that own the box. We need proof that doesn’t require a trusted gatekeeper.

Crypto is the great equalizer of trust. Anyone can build, and anyone can verify, because verification is permissionless. Reputation gives way to commitments and proofs: content hashes that fix origin, provenance graphs that track derivatives, and attestations (from secure hardware or zero‑knowledge proofs) that bind code to inputs. Policy becomes program; licenses become machine‑enforceable; payments and splits settle onchain with receipts. Verification becomes cheap and neutral, letting small actors check the powerful and machines check each other. Decentralization distributes control; verifiability makes that distribution meaningful.

But decentralization alone doesn’t get you there. BitTorrent proved that distribution is useful but not valuable. Bitcoin proved that verifiability is what makes a network valuable. The stack that wins AI isn’t the one with the cheapest GPUs or the biggest API surface. It’s the one that can prove the most about where data came from, how computation ran, and who deserves to be paid.

Verifiability is the bridge to something bigger: sovereignty. Once intelligence is verifiable, it can stand on its own terms. Not a monoculture of boxed‑up B2B SaaS, but a plural society of agents with distinct styles, goals, and economics. Think poets and traders, builders and explorers, each with policies, rights, and wallets, composing one another in public. That world is livelier, fairer, and harder to counterfeit.

Here’s the frame we’re advancing:

- Provenance: sources, licenses, and attribution are programmable and auditable.

- Execution: training and inference produce attestations, not assertions.

- Settlement: usage, royalties, and policy enforcement clear onchain with receipts.

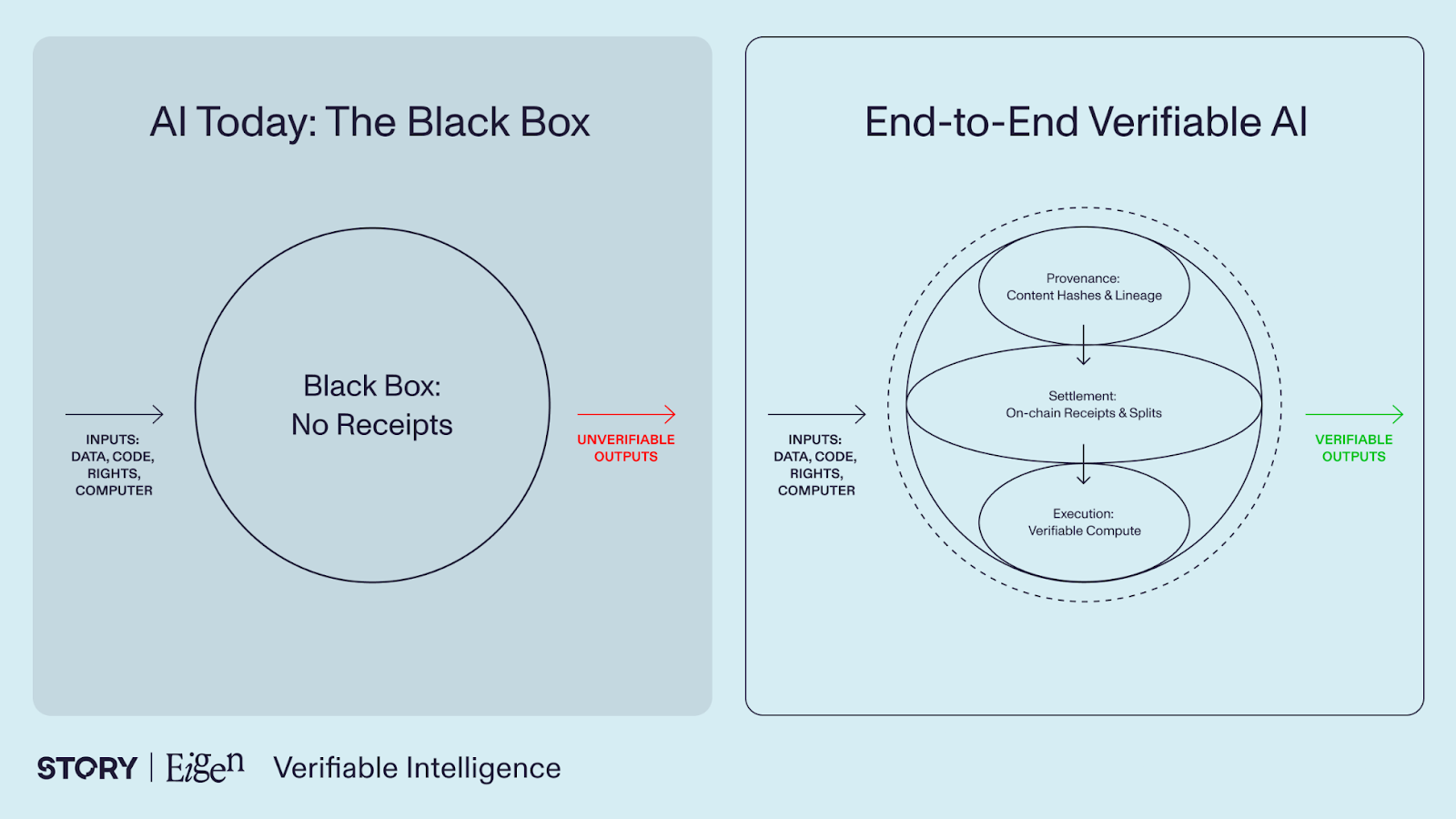

Story provides programmable provenance and licensing for data, models, and remixes of IP. EigenCloud provides verifiable execution for storage and compute. Together, Story and EigenCloud make intelligence verifiable end to end, so contributions can be priced, rights can be respected, and agents can be sovereign.

Verifiability scales the system. Sovereignty makes it worth building. This is how AI stops being a black box and becomes the foundation for a verifiable economy of accountable, open intelligence, ushering in a new Crypto x AI market.

What Verification Unlocks for AI

Combining decentralized compute with decentralized data/IP unlocks concrete capabilities that today’s centralized AI cannot easily achieve.

First, it enables proof-of-origin for data and models. In practice, content (datasets, model outputs, etc.) can be registered at the source onchain, with cryptographic provenance tracking who created it and when. These artifacts come wrapped in programmable licenses that define how they can be used or remixed, and every usage can be metered and automatically trigger royalty payments to the rightful contributors. This means AI developers and data providers finally have a transparent way to license and get paid for their work, rather than sending data into a black hole.
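The following minimal sketch shows how registration, metering, and royalties can fit together. It makes several assumptions. An in-memory Python registry stands in for an onchain one. An asset's ID is the SHA-256 hash of its content. Derivative works list their parents. Every metered use forwards a fixed share of the fee up the derivative graph. The class names, the `record_usage` method, and the 10% parent share are hypothetical illustrations, not Story's actual contracts or API.

```python
from __future__ import annotations

import hashlib
import time
from dataclasses import dataclass


@dataclass
class Asset:
    asset_id: str        # SHA-256 of the content, which fixes its origin
    creator: str
    terms: str           # license label, e.g. "commercial" or "non-commercial"
    parents: list[str]   # asset_ids this work derives from
    registered_at: float
    royalties: int = 0   # accrued royalties in integer stablecoin base units


class ProvenanceRegistry:
    """Hypothetical in-memory stand-in for an onchain IP registry."""

    def __init__(self, parent_share_bps: int = 1_000):
        self.assets: dict[str, Asset] = {}
        # Share of every fee forwarded to parent assets (1_000 bps = 10%); an assumed value.
        self.parent_share_bps = parent_share_bps

    def register(self, content: bytes, creator: str, terms: str, parents: tuple[str, ...] = ()) -> str:
        asset_id = hashlib.sha256(content).hexdigest()
        if asset_id in self.assets:
            raise ValueError("content already registered")
        for parent in parents:
            if parent not in self.assets:
                raise KeyError(f"unknown parent {parent}")
        self.assets[asset_id] = Asset(asset_id, creator, terms, list(parents), time.time())
        return asset_id

    def record_usage(self, asset_id: str, fee: int) -> None:
        """Meter one use of an asset and route the fee up its derivative graph."""
        asset = self.assets[asset_id]
        upstream = fee * self.parent_share_bps // 10_000 if asset.parents else 0
        asset.royalties += fee - upstream
        if asset.parents:
            per_parent, remainder = divmod(upstream, len(asset.parents))
            for i, parent in enumerate(asset.parents):
                # The first parent absorbs the rounding remainder so no units are lost.
                self.record_usage(parent, per_parent + (remainder if i == 0 else 0))


registry = ProvenanceRegistry()
dataset = registry.register(b"original dataset", "alice", "commercial")
model = registry.register(b"fine-tuned model", "bob", "commercial", parents=(dataset,))
registry.record_usage(model, 1_000)
print(registry.assets[model].royalties, registry.assets[dataset].royalties)  # 900 100
```

Fees are kept as integer base units so splits never lose value to floating-point rounding. This is also how token amounts are handled onchain.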

Second, decentralization allows efficient coordination of resources and data on an unprecedented scale. Massive volumes of training data and vast computing power can be pooled from distributed sources through cryptoeconomic incentives. Instead of a single company hoarding data or compute, many independent parties can contribute pieces (whether it’s a chunk of a dataset or a slice of GPU time) and be rewarded for honest service. This opens the door to near-boundless AI capacity that scales as a function of community participation, rather than corporate capital expenditures.

Third, we get proof-of-processing for model training and inference. AI computations can be run over a decentralized network of operators (for example, nodes in a compute marketplace like EigenCloud’s) with guarantees that the intended code was executed correctly on the intended data. Restaking (posting staked crypto as a security deposit that can be slashed for misbehavior) gives operators an economic reason to stay honest, while secure hardware or zero-knowledge proofs let them produce verifiable attestations of each computation. In other words, anyone can trust the results without trusting any single compute provider: the network provides cryptographic proof that a model was trained on certain data with certain parameters, or that an inference was performed correctly, all without centralized oversight.
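The core idea of an execution attestation is to bind the hashes of the code, the inputs, and the output into one authenticated claim. A verifier can then check that claim against what it expected to run. The minimal sketch below uses an HMAC from Python's standard library as a stand-in for the signature a TEE or zero-knowledge system would produce. That substitution is an assumption made for brevity. Real attestations use public-key or proof verification, so the verifier never holds the operator's secret. The `attest` and `verify` names are hypothetical.

```python
import hashlib
import hmac
import json


def digest(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def _tag(key: bytes, claim: dict) -> str:
    # Canonical JSON so operator and verifier MAC exactly the same bytes.
    payload = json.dumps(claim, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def attest(key: bytes, code: bytes, inputs: bytes, output: bytes) -> dict:
    """Operator side: bind code, inputs, and output hashes into one authenticated claim."""
    claim = {"code": digest(code), "input": digest(inputs), "output": digest(output)}
    return {**claim, "tag": _tag(key, claim)}


def verify(key: bytes, attestation: dict, expected_code: bytes, inputs: bytes, output: bytes) -> bool:
    """Verifier side: the claim must be authentic AND describe the code/data we expected."""
    claim = {k: attestation[k] for k in ("code", "input", "output")}
    return (
        hmac.compare_digest(_tag(key, claim), attestation["tag"])
        and claim == {"code": digest(expected_code), "input": digest(inputs), "output": digest(output)}
    )


key = b"operator-key"  # hypothetical; a TEE or ZK system would use public-key verification instead
att = attest(key, b"model-v1 weights", b"prompt", b"answer")
print(verify(key, att, b"model-v1 weights", b"prompt", b"answer"))   # True
print(verify(key, att, b"model-v2 quantized", b"prompt", b"answer"))  # False
```

The second check fails even though the tag is authentic. The claim itself records that different weights ran, which is exactly the silent model swap that verifiable execution is meant to expose.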

Finally, this architecture bakes in proof-of-personhood and native payments as first-class features. Decentralized identity solutions can distinguish humans from bots (e.g., one-human-one-proof systems) to ensure that AI inputs come from real people when needed. At the same time, AI agents themselves can be given onchain wallets and identities, so they become economic actors. These agents can autonomously send and receive microtransactions, paying per API call or per dataset access in stablecoins, and enforce usage policies via smart contracts. This means an AI agent could, for instance, buy access to a data stream or invoke another model’s API on its own, with all payments and license checks handled automatically on the blockchain.
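To make "enforce usage policies" concrete, here is a minimal sketch of an agent wallet that applies its spending policy before any funds move. It checks an allow-list of payees, a per-call price cap, and the available balance, and it records a receipt for each payment. The `AgentWallet` class and its fields are hypothetical. In practice these guards would live in a smart contract rather than in Python. Amounts are integer stablecoin base units; USDC, for example, uses 6 decimals.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AgentWallet:
    """Hypothetical agent wallet; amounts are integer stablecoin base units (6 decimals for USDC)."""
    balance: int
    max_per_call: int
    allowed_payees: set[str] = field(default_factory=set)
    receipts: list[tuple[str, int, str]] = field(default_factory=list)

    def pay_per_call(self, payee: str, price: int, memo: str) -> None:
        # Policy checks run before any funds move, mirroring a smart-contract guard.
        if payee not in self.allowed_payees:
            raise PermissionError(f"{payee} is not on the allow-list")
        if price > self.max_per_call:
            raise PermissionError("price exceeds the per-call cap")
        if price > self.balance:
            raise ValueError("insufficient balance")
        self.balance -= price
        self.receipts.append((payee, price, memo))  # receipt for later audit


wallet = AgentWallet(balance=5_000_000, max_per_call=10_000, allowed_payees={"market-data-feed"})
wallet.pay_per_call("market-data-feed", 2_500, "1 request")
print(wallet.balance)  # 4997500
```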

Together, these capabilities form a shared accountability fabric for AI. Every key event in the AI lifecycle (who contributed which data or model, who processed it, under what terms, and who ultimately gets paid) is recorded and enforced onchain. This fabric promises an AI ecosystem that is far more transparent and fair than today’s status quo.

Enabling Agentic Commerce

If we zoom out, what do these decentralized capabilities really enable? They allow AI agents to become economic participants in their own right. In an era of autonomous services and AI-driven agents, we’re heading toward what Story’s team framed as Agent TCP/IP: a state where autonomous agents discover, negotiate, and compose services with each other, much like computers discover and exchange data over the internet. But realizing this vision of agentic commerce (commerce conducted by and among AI agents) requires a new breed of cryptographic contracts and onchain infrastructure. For this to work, agents need:

- Licensed Onchain Skills: Models, datasets, APIs, and prompts published onchain with embedded licenses and permissions.

- Verifiable Execution: Systems like EigenCloud offer cryptographic attestations proving that services ran correctly on specified inputs.

- Instant Settlement: Agents pay each other per API call or data access using stablecoins, enforced by smart contracts.

This enables trustless coordination. An agent needing a translation service can discover a model, check its provenance and terms, pay per call, and verify output—all without human intervention.
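The discovery step of that flow can be sketched as a filter over a catalog of registered services. The agent keeps only services whose provenance checks passed, whose license terms permit the intended use, and whose price fits its budget. It then picks the cheapest remaining option. This is a minimal sketch under stated assumptions. The `Service` fields, the catalog, and the selection rule are all hypothetical, and a real agent would read this data from onchain registrations. Payment and output verification would follow the same pattern as the wallet and attestation checks.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Service:
    name: str
    skill: str             # e.g. "translate"
    commercial_ok: bool    # license terms permit commercial use
    price_per_call: int    # integer stablecoin base units
    provenance_ok: bool    # registration and attestation checks passed


def choose_service(catalog: list[Service], skill: str, commercial: bool, budget: int) -> Optional[Service]:
    """Return the cheapest service whose provenance, license terms, and price fit the request."""
    candidates = [
        s for s in catalog
        if s.skill == skill
        and s.provenance_ok
        and (s.commercial_ok or not commercial)
        and s.price_per_call <= budget
    ]
    return min(candidates, key=lambda s: s.price_per_call, default=None)


catalog = [
    Service("nc-translator", "translate", commercial_ok=False, price_per_call=5, provenance_ok=True),
    Service("pro-translator", "translate", commercial_ok=True, price_per_call=8, provenance_ok=True),
    Service("unverified", "translate", commercial_ok=True, price_per_call=3, provenance_ok=False),
]
print(choose_service(catalog, "translate", commercial=True, budget=10).name)  # pro-translator
```

The cheapest service is skipped because its provenance cannot be verified, and the non-commercial one is skipped because the request is commercial. The license and the proof both shape the choice, not only the price.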

The Verifiable Intelligence Stack

So what will it take to implement this vision? We need a cohesive stack that brings together identity, data, compute, and payments into a verifiable whole. We can think of this as the Verifiable Intelligence Stack – a reference architecture for decentralized AI systems. Its layers include:

Identity & Context: Verified identity for humans and AI agents. Prevents Sybil attacks and enforces geo-fencing or allow-lists.

IP Registration & Licensing (Story): Assets registered with provenance and programmable license terms (e.g., non-commercial use only). Rules are enforced onchain.

Data Plane: Datasets, model weights, and logs are content-addressed and access-controlled by smart contracts according to license terms. They can be stored in EigenDA and take advantage of its upcoming 1 GB/s throughput.

Compute Plane (EigenCloud): Operators provide secure compute, and execution proofs make it verifiable.

Payments & Accounting: Stablecoins settle payments. Smart contracts split revenue across contributors according to their agreed shares.

Observability & Compliance: Onchain logs and policies enable real-time auditing, preventing misuse and ensuring accountability.
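The Payments & Accounting layer's revenue split reduces to integer arithmetic in basis points. The one subtlety is rounding dust. The minimal sketch below assigns leftover base units to the largest shareholder so that payouts always sum exactly to the amount paid. That dust rule, the function name, and the example shares are assumptions for illustration. Contracts can choose other conventions, such as paying dust to a treasury.

```python
from __future__ import annotations


def split_revenue(amount: int, shares_bps: dict[str, int]) -> dict[str, int]:
    """Split an integer payment by basis points (10_000 bps = 100%).

    Integer division leaves a few base units of "dust"; here it goes to the
    largest shareholder so payouts always sum exactly to `amount`.
    """
    if sum(shares_bps.values()) != 10_000:
        raise ValueError("shares must sum to 10_000 bps")
    payouts = {who: amount * bps // 10_000 for who, bps in shares_bps.items()}
    payouts[max(shares_bps, key=shares_bps.get)] += amount - sum(payouts.values())
    return payouts


print(split_revenue(1_001, {"dataset": 5_000, "model": 3_000, "operator": 2_000}))
# {'dataset': 501, 'model': 300, 'operator': 200}
```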

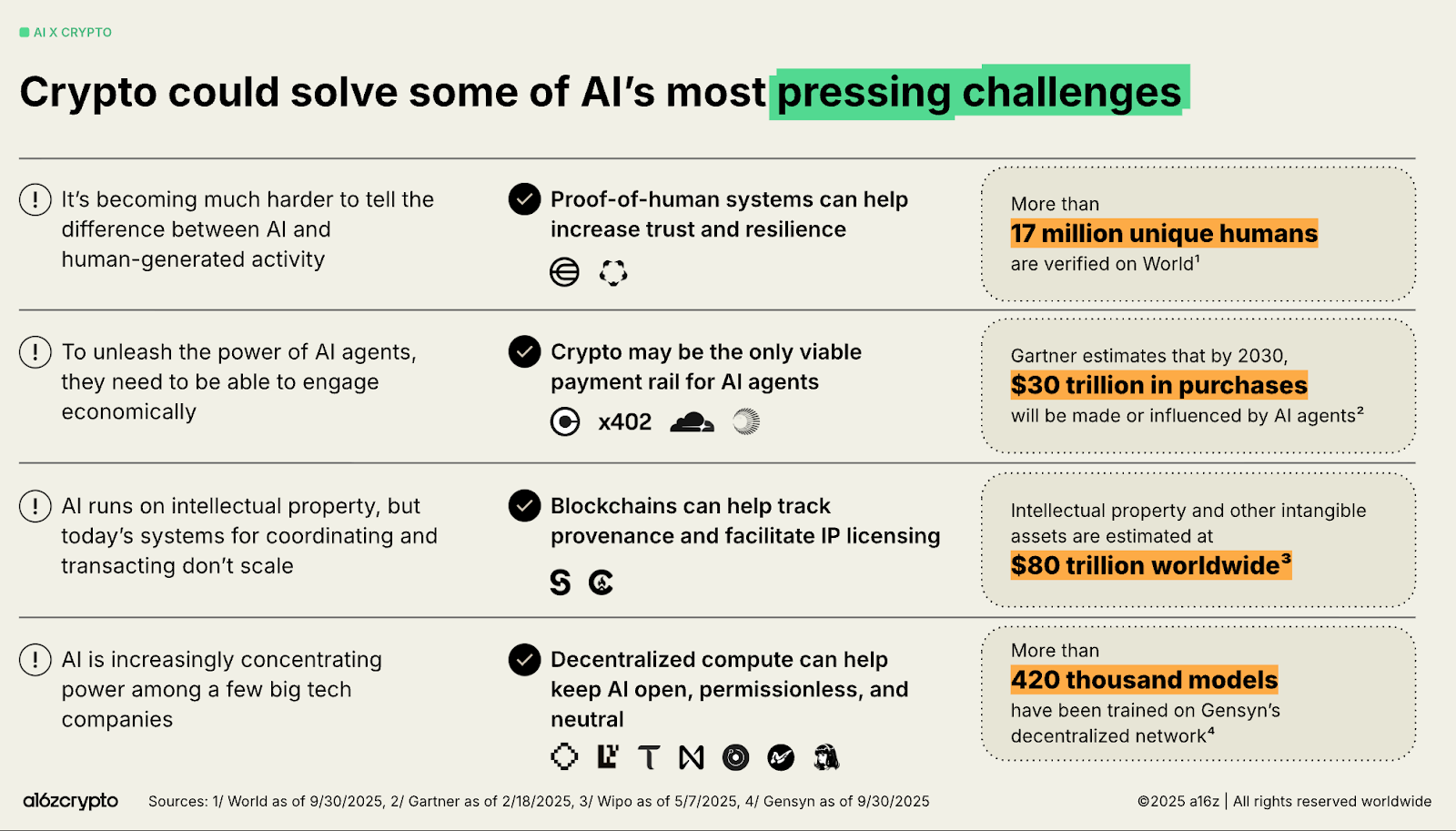

All of these layers work in concert as the rails for verifiable AI. Notably, this stack directly addresses key pain points that industry observers have highlighted in the AI+crypto convergence. For instance, Andreessen Horowitz’s crypto research team recently underscored the need for better proof-of-human systems, onchain agent payment rails, robust IP provenance tracking, and alternatives to centralized AI infrastructure. The verifiable intelligence stack is intentionally designed to meet these needs head-on. It provides the identity, payment, and provenance scaffolding that AI applications have been missing, while also countering the drift toward centralized control over AI compute. In doing so, it lays a foundation for the next era of AI development to be far more open and accountable.

Applications in Motion

Many pieces of this vision are already being built. A number of early projects and use cases are demonstrating how decentralized compute and IP can work together in practice. Here are four concrete examples in domains ranging from data crowdsourcing to gaming that show verifiable AI in motion:

Poseidon: Crowdsources massive datasets, which are validated and processed by AVS (Actively Validated Service) networks. Model training and quality checks occur with full onchain traceability. Contributors earn royalties.

OpenLedger: Enables IP-safe fine-tuning. Licensed base models and datasets are combined with cryptographic proofs that training respected license constraints. Payments and credits are automatic.

Verse8: In decentralized gaming, world simulation and NPC logic run on AVS networks. All user-generated content is registered on Story. In-game assets are truly owned, licensed, and monetized by players.

Verio: Offers decentralized IP enforcement. AVS validators analyze model behavior and outputs for license violations. Misuse triggers slashing of collateral, so the threat of slashing deters violations before they happen.
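A Verio-style enforcement loop can be sketched as a validator vote followed by a slashing rule. This is a minimal sketch, and the adjudication rule is an assumption, not Verio's documented mechanism. Each validator reports whether it found a violation, a strict majority counts as a finding, and the operator loses a fixed fraction of its collateral. The `adjudicate` function, the majority rule, and the 50% slash rate are all hypothetical.

```python
from __future__ import annotations


def adjudicate(stake: int, votes: list[bool], slash_bps: int = 5_000) -> tuple[bool, int]:
    """Hypothetical Verio-style check.

    Each validator votes True if it found a license violation in the model's
    outputs. A strict majority counts as a violation, and the operator's
    collateral is slashed by `slash_bps` (5_000 bps = 50%, an assumed rate).
    Returns (violation_found, remaining_stake).
    """
    if not votes:
        raise ValueError("no validator votes")
    violation = 2 * sum(votes) > len(votes)
    remaining = stake - stake * slash_bps // 10_000 if violation else stake
    return violation, remaining


print(adjudicate(1_000, [True, True, False]))  # (True, 500)
print(adjudicate(1_000, [True, False]))        # (False, 1000)
```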

These projects solve real-world problems with verifiability, showing decentralized AI is already taking root.

Why This Matters

Stepping back, why does all of this matter? Because the stakes for getting AI’s next chapter right are enormous both economically and technologically. Here are a few big-picture reasons the decentralized approach to AI is paramount:

Economic Gravity: Data and IP are an $80T+ asset class locked in static contracts. Onchain licensing turns them into liquid assets.

Agent Demand: Machine customers are growing fast. They need systems that let them license, transact, and verify autonomously.

Neutral Compute: Centralized AI compute concentrates control in a few providers. Decentralized compute provides open, censorship-resistant alternatives.

Proof over Trust: With attestation and onchain receipts, trust is replaced by cryptographic verification. This improves transparency, fairness, and regulatory readiness.

Early networks show it works: decentralized AI platforms have run hundreds of thousands of jobs using community compute, proving that when incentives and verification align, open systems can scale.

Story × EigenCloud: Building Together

Story and EigenCloud are combining forces to make this real. We’re working in tandem to ensure that these pieces seamlessly interconnect. Our shared vision is that Story’s programmable IP and data provenance becomes the default policy layer for AI (governing what’s allowed, how assets can be used, and who gets compensated), and EigenCloud’s verifiable compute becomes the default execution and enforcement layer that actually runs the AI workloads under those rules. In practice, this means whenever someone is building a decentralized AI application, whether it’s a new model, a dataset market, or an agent platform, the norms and standards from our protocols will be readily available to handle the hard parts of trust and compliance.

Think: Onchain AI skills, priced and licensed by default, available to any agent. Developers compose services with baked-in trust and revenue sharing. Agents transact, invoke, and verify automatically. It’s a programmable open marketplace for AI.

This piece is a jointly authored exploration by Story Protocol and EigenCloud. It reflects our shared belief that the future of AI depends on verifiability, from how data is sourced and licensed, to how models are trained, to how agents transact and enforce policy. Neither of our teams believes this transformation can be accomplished by a single protocol or a single company. It requires a coordinated architecture that brings together programmable provenance, decentralized execution, and cryptoeconomic settlement.