How SoraChain AI Uses EigenCompute to Enable Global Federated Learning Infra That Actually Scales

Eli Lilly's TuneLab and SWIFT's banking experiments showed that federated learning works, yet both stayed stuck at pilot scale within small, trusted circles. That matters because data diversity is what makes AI models robust, and current deployments lack the distributed trust, verifiability, and operational scalability needed to reach beyond those circles.

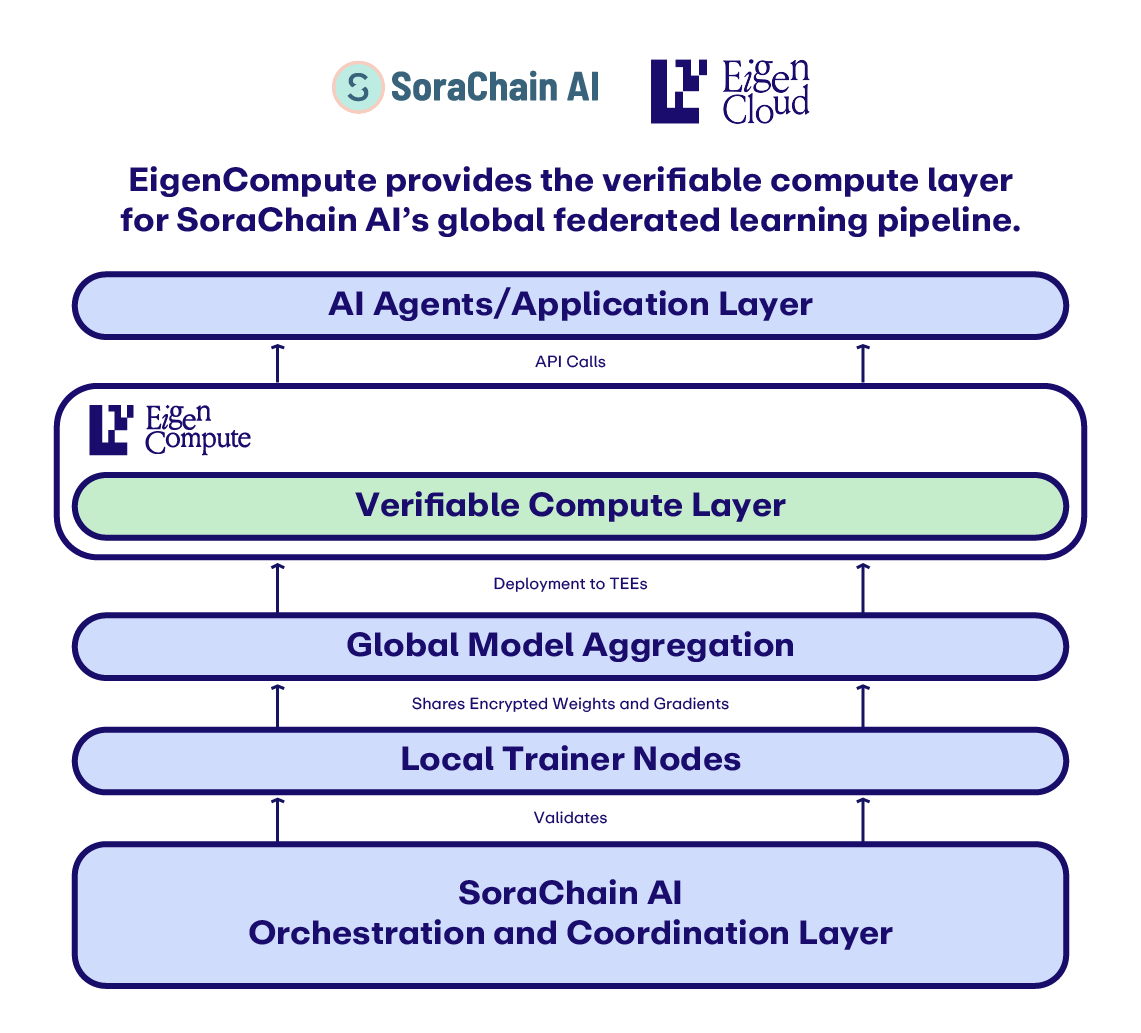

EigenCompute addresses this with Trusted Execution Environments (TEEs) and remote attestation, enabling trustless, cross-border federated learning networks that can train production-grade global models. This case study shows how SoraChain AI put that approach into production.

What is SoraChain AI?

SoraChain AI builds federated learning infrastructure that enables organizations to collaboratively train AI models without exposing sensitive training data. Their architecture is fully composable: teams can mix TEEs, multi-party computation (MPC), zero-knowledge proofs, or fully homomorphic encryption (FHE) to match their security requirements.

Their first production deployment on EigenCompute targets an unexpectedly consumer-friendly use case: brand influencers training Instagram caption models across their own devices. Training data never leaves individual phones; only encrypted model updates are shared. Partner brands get privacy-safe, real-time insights without any raw user data being exposed.

But the real vision extends far beyond Instagram captions. SoraChain AI is building the infrastructure layer for what it calls "Personalized Intelligence": AI models trained on global datasets while respecting data sovereignty, regulatory boundaries, and individual privacy.

The Challenge

Federated learning requires a trusted aggregator to combine model updates from multiple participants. Traditional solutions face a fundamental trust and coordination problem:

- Centralized Aggregators: Require participants to trust a single entity with their encrypted model updates

- No Verifiability: Participants cannot verify that aggregation was performed correctly

- Privacy Risks: Even with encryption, centralized aggregators become high-value targets

- Regulatory Compliance: Healthcare, finance, and other regulated industries need auditable, tamper-proof aggregation and cannot conduct cross-border training

- Blockchain Limitations: Onchain aggregation is prohibitively expensive, costing millions in gas fees

These problems create a hard ceiling on federated learning deployments. Without provable trust, federated learning cannot scale beyond a handful of nodes. Global training and inference require hundreds or thousands of participants across borders and regulatory regimes, and current solutions cannot deliver that.

The Solution

SoraChain AI found their answer in EigenCompute's managed TEE infrastructure.

What is EigenCompute?

EigenCompute is a verifiable offchain compute service that allows developers to run complex agent logic outside smart contracts while maintaining onchain security guarantees. The mainnet alpha release lets developers upload application logic as Docker images, which execute inside secure Trusted Execution Environments (TEEs).

TEEs provide hardware-backed isolation: code runs in a protected environment that even the host operator cannot access or modify. EigenCompute uses Intel TDX, whose cryptographic attestation proves that both the code and the execution environment are untampered. EigenCompute handles the infrastructure complexity: TEE provisioning, key management, blockchain integration, and operational monitoring.
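The core check behind attestation can be sketched in a few lines. This is a simplified model under stated assumptions, not Intel TDX's quote format or an EigenCompute API: a real TDX quote carries hardware measurements signed by a key that chains to Intel's certificates, while this sketch substitutes an HMAC key for that hardware signature. The names `AttestationReport`, `measure`, `sign_report`, and `verify_report` are hypothetical.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class AttestationReport:
    # Hypothetical, simplified report: a measurement (hash) of the code the
    # TEE loaded, plus a signature over that measurement.
    measurement: bytes
    signature: bytes


def measure(code_image: bytes) -> bytes:
    """Hash of the code image; stands in for the TEE's launch measurement."""
    return hashlib.sha384(code_image).digest()


def sign_report(measurement: bytes, hardware_key: bytes) -> AttestationReport:
    # Stand-in for the hardware signing step. Real TDX quotes use an
    # asymmetric key certified by Intel, not a shared HMAC key.
    sig = hmac.new(hardware_key, measurement, hashlib.sha256).digest()
    return AttestationReport(measurement, sig)


def verify_report(report: AttestationReport, expected_image: bytes,
                  hardware_key: bytes) -> bool:
    """Accept only if the signature is valid AND the measured code matches
    the code the participant audited."""
    expected_sig = hmac.new(hardware_key, report.measurement,
                            hashlib.sha256).digest()
    sig_ok = hmac.compare_digest(report.signature, expected_sig)
    code_ok = hmac.compare_digest(report.measurement, measure(expected_image))
    return sig_ok and code_ok


if __name__ == "__main__":
    key = b"demo-hardware-key"          # hypothetical stand-in for the hardware root of trust
    audited = b"aggregator v1.0 image"  # the code participants reviewed
    genuine = sign_report(measure(audited), key)
    tampered = sign_report(measure(b"aggregator v1.0 image + backdoor"), key)
    print(verify_report(genuine, audited, key))
    print(verify_report(tampered, audited, key))
```

The design point is that a participant checks two things independently: that the report was signed by the hardware root of trust, and that the measured code matches the code it audited. If either check fails, the participant should not send updates to that aggregator.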

For developers, this means smart-contract-level security guarantees without smart-contract scalability limits. Computations that would cost millions in gas fees onchain become economically viable, and long-running agent workflows that would time out onchain become practical.

Why SoraChain AI Chose EigenCompute

EigenCompute solved the core infrastructure challenges that would have otherwise taken SoraChain AI 6-8 months to build from scratch.

Hardware-backed security was non-negotiable. Intel TDX TEEs provide real hardware isolation with cryptographic attestation that proves code and environment integrity, so security does not depend on trusting operators. EigenCompute is the only platform offering this level of verifiable security today.

Developer experience accelerated the timeline dramatically. The intuitive ‘devkit avs’ workflow enabled same-day deployments, while clear documentation and responsive support reduced debugging overhead. What would have been weeks of testing became hours.

Native onchain integration eliminated months of blockchain development work. TEE-generated keys simplified secure key management, smart-contract interactions used gas-optimized patterns, and multi-chain support for both EVM and Solana came out of the box.

Cost efficiency made the economics viable. Fully managed infrastructure with pay-as-you-go compute eliminated the need to operate TEE hardware, handle maintenance, or manage patching. The alternative—building a custom stack—would have cost 6-8 months of engineering time.

Production readiness meant no compromises. The platform delivered 100% uptime in testing, built-in monitoring and attestation for operational visibility, and the ability to scale to 10-100+ clients without custom infrastructure. Transparent isolation guarantees gave SoraChain AI the confidence to deploy sensitive workloads immediately.

With EigenCompute handling the infrastructure complexity, SoraChain AI could focus entirely on building its federated learning engine.

How SoraChain AI Implements EigenCompute

The integration centers on four components:

- AI Model Aggregation runs inside EigenCompute TEEs, securely combining model updates from distributed clients. The TEE environment ensures aggregation logic executes exactly as specified, with cryptographic proof.

- Smart Contract Registry maintains an onchain record of all aggregated models, training rounds, and inference results. This creates an immutable audit trail for regulatory compliance.

- Attestation Layer provides cryptographic proof that aggregation code runs unmodified inside genuine TEE hardware. Participants can verify execution integrity without trusting SoraChain AI.

- Privacy Guarantees ensure training data never leaves client devices. Only encrypted gradients are shared; they are aggregated inside the TEE, and the aggregated result is recorded onchain.
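The aggregation step and its registry entry can be sketched as follows. The source does not specify SoraChain AI's aggregation rule, so this sketch assumes standard federated averaging (FedAvg), weighting each client's update by its number of local training examples, and models the registry entry as a hash-chained record. `ClientUpdate`, `fed_avg`, `RoundRecord`, and `record_round` are hypothetical names, and the decryption that would precede averaging inside the TEE is omitted.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass
class ClientUpdate:
    client_id: str
    weights: list[float]  # flattened model parameters from one client
    num_examples: int     # local dataset size, used as the averaging weight


def fed_avg(updates: list[ClientUpdate]) -> list[float]:
    """Federated averaging: example-weighted mean of client parameters."""
    if not updates:
        raise ValueError("no client updates to aggregate")
    dim = len(updates[0].weights)
    if any(len(u.weights) != dim for u in updates):
        raise ValueError("all updates must have the same shape")
    total = sum(u.num_examples for u in updates)
    if total <= 0:
        raise ValueError("total example count must be positive")
    return [
        sum(u.weights[i] * u.num_examples for u in updates) / total
        for i in range(dim)
    ]


@dataclass
class RoundRecord:
    round_number: int
    model_hash: str  # digest of the aggregated model, not the weights themselves
    prev_hash: str   # links each round to the previous record (audit trail)

    def record_hash(self) -> str:
        payload = json.dumps(asdict(self), sort_keys=True).encode()
        return hashlib.sha256(payload).hexdigest()


def record_round(round_number: int, model: list[float], prev_hash: str) -> RoundRecord:
    """Build the (hypothetical) registry entry for one aggregation round."""
    model_hash = hashlib.sha256(json.dumps(model).encode()).hexdigest()
    return RoundRecord(round_number, model_hash, prev_hash)


if __name__ == "__main__":
    updates = [
        ClientUpdate("phone-a", [1.0, 2.0], num_examples=100),
        ClientUpdate("phone-b", [3.0, 4.0], num_examples=300),
    ]
    global_model = fed_avg(updates)  # [2.5, 3.5]
    genesis = "0" * 64
    r1 = record_round(1, global_model, genesis)
    print(global_model, r1.record_hash())
```

Recording only a digest of each aggregated model, chained to the previous round's record, is one way to get a tamper-evident audit trail without putting model weights onchain.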

With this infrastructure deployed in under two weeks, SoraChain AI moved from building infrastructure to measuring real-world impact.

Results

Since integrating EigenCompute, SoraChain AI has achieved transformative results across technical execution, business impact, and market positioning.

Technical Achievements

The system is production-ready, with 5+ federated learning clients actively participating, 50+ training rounds completed, and 1,000+ inference requests processed, all with zero security incidents and 100% uptime.

Performance metrics demonstrate real-world viability:

- Aggregation latency: 2-5 minutes per round (10-20 clients)

- Model convergence: 15-30 training rounds depending on use case

- Inference throughput: 10-20 requests per minute

- Attestation overhead: Under 100ms per operation

- Onchain gas usage: 80K-150K gas per aggregation round
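To put the gas figure in context, the fee for a round is gas used multiplied by gas price. The source does not say which chain or gas price applies, so the 10 gwei price below is a hypothetical input, not a measured value. At that assumed price, 80K-150K gas works out to 0.0008-0.0015 ETH per round.

```python
WEI_PER_GWEI = 10**9
WEI_PER_ETH = 10**18


def round_fee_wei(gas_used: int, gas_price_gwei: int) -> int:
    """Fee for one aggregation round: gas used x gas price, in wei."""
    return gas_used * gas_price_gwei * WEI_PER_GWEI


def campaign_fee_eth(gas_per_round: int, gas_price_gwei: int, rounds: int) -> float:
    """Total fee in ETH for a number of rounds at a fixed gas price."""
    return round_fee_wei(gas_per_round, gas_price_gwei) * rounds / WEI_PER_ETH


if __name__ == "__main__":
    price = 10  # hypothetical gas price in gwei; real prices vary by chain and time
    for gas in (80_000, 150_000):
        per_round = round_fee_wei(gas, price) / WEI_PER_ETH
        total = campaign_fee_eth(gas, price, rounds=50)
        print(f"{gas:>7} gas: {per_round:.4f} ETH/round, {total:.3f} ETH for 50 rounds")
```

Working in integer wei until the final conversion avoids floating-point rounding in the per-round fee.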

Model quality matches centralized baselines across multiple domains. Content moderation models achieve F1 scores above 0.85, medical imaging models trained on the CheXpert dataset reach an AUC above 0.80, and credit scoring models deliver accuracy above 0.75.

Business Impact

Time-to-market acceleration represents the most dramatic improvement. SoraChain AI cut infrastructure build time from 6-12 months to 2 weeks. They avoided $200K-$400K in engineering costs while spending only $5K-$10K on compute in their first six months.

This speed created a competitive advantage. SoraChain AI became the only platform offering verifiable federated learning, establishing a 6-12 month market lead. Over 10 organizations are now in active evaluation, including healthcare providers and financial institutions that previously considered federated learning impractical.

Technical Validation

Security validation confirms the infrastructure works as designed. TEE attestation proves execution integrity. No data leakage has occurred across 50+ training rounds. Key management remains secure with TEE-generated keys. Network isolation prevents unauthorized access.

Reliability testing demonstrates production-grade stability. The system maintains 100% uptime with graceful error handling and automatic recovery. Real-time monitoring provides operational visibility without custom tooling.

Scalability testing proves the architecture can grow. Current deployments support 10-20 clients training models with 50M-450M parameters. Convergence matches centralized training baselines while maintaining sustained inference throughput.
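The parameter range also sets how much data moves per round. As a rough sketch, assuming dense updates sent as 32-bit floats (the source states neither the precision nor whether updates are compressed), a 50M-parameter update is about 200 MB and a 450M-parameter update about 1.8 GB, before encryption overhead. The helper names below are hypothetical.

```python
# Bytes per parameter for common numeric encodings.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def update_size_bytes(num_params: int, dtype: str = "fp32") -> int:
    """Raw size of one client's dense update, before encryption or compression."""
    return num_params * BYTES_PER_PARAM[dtype]


def round_ingress_bytes(num_params: int, num_clients: int, dtype: str = "fp32") -> int:
    """Total update bytes the aggregator receives in one round."""
    return update_size_bytes(num_params, dtype) * num_clients


if __name__ == "__main__":
    for params in (50_000_000, 450_000_000):
        per_client_gb = update_size_bytes(params) / 1e9
        per_round_gb = round_ingress_bytes(params, num_clients=20) / 1e9
        print(f"{params // 1_000_000}M params: {per_client_gb:.1f} GB/client, "
              f"{per_round_gb:.1f} GB/round at 20 clients")
```

Halving precision (for example `"fp16"`) halves these figures, which is one reason the update encoding matters as client counts grow.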

Building Together

EigenCompute and SoraChain AI together built verifiable, global-scale federated learning infrastructure that the industry needed but neither company could have built alone. Their combined stack lets partners train models across borders without sacrificing privacy, compliance, or trust.

For developers building verifiable AI in healthcare, finance, prediction markets, or autonomous agents: the infrastructure exists. This is just the beginning of what's possible when secure compute and decentralized AI training come together.

Get early access to EigenCompute. Build your own verifiable AI application at developers.eigencloud.xyz